I've accumulated quite a lot of nerd automations in my tech stack, I'll try to give an idea of what I've done up to this day

For the Cloudron instance I run

- A cron job that monitor disk usage, using bash +Cloudron API, it will alert me via email and ntfy when any folder usage > 75%.

- A cron job that checks if some apps time out and restart them via Cloudron API. In bash too.

DNS Monitoring

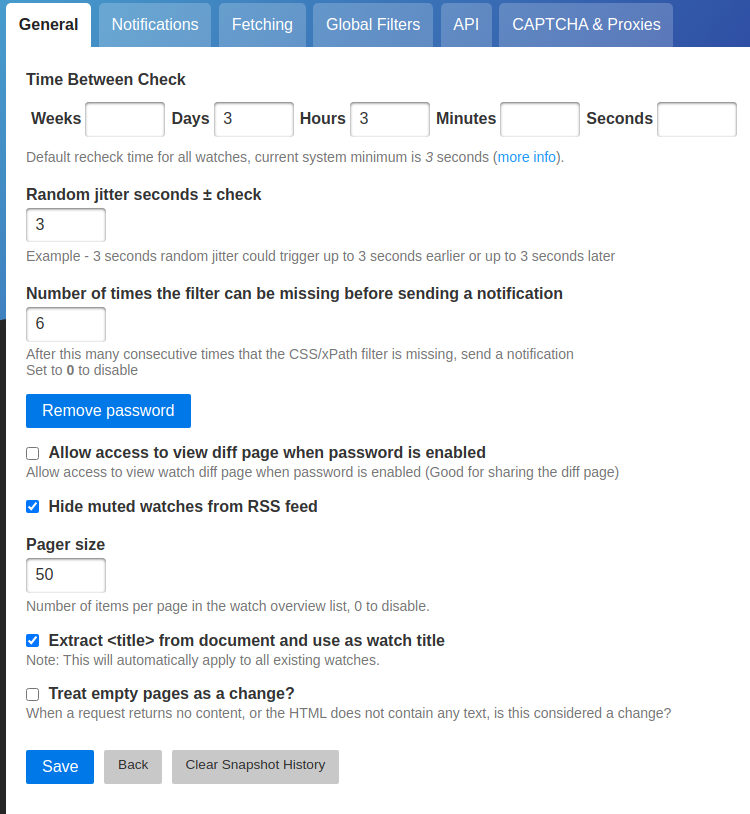

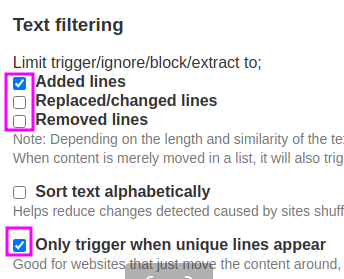

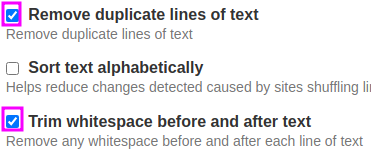

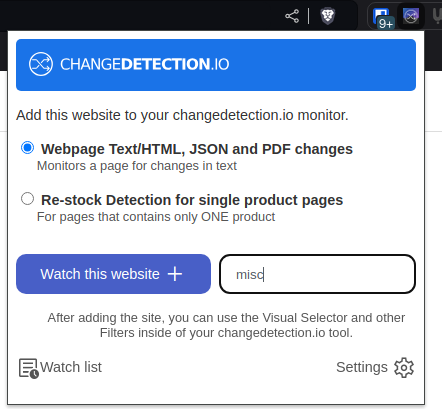

- I take a snapshot of my Hetzner DNS configs every 5 minutes and watch frequently for diffs using Changedetection.

Uptime monitoring

- I'm using Uptime Kuma to monitor the status pages of several services/APIs I'm relying on (Dropbox, OpenAI, Mistral AI, ...) as well as my own self-hosted apps. I get ntfy alerts in case any is failing.

Feed generators

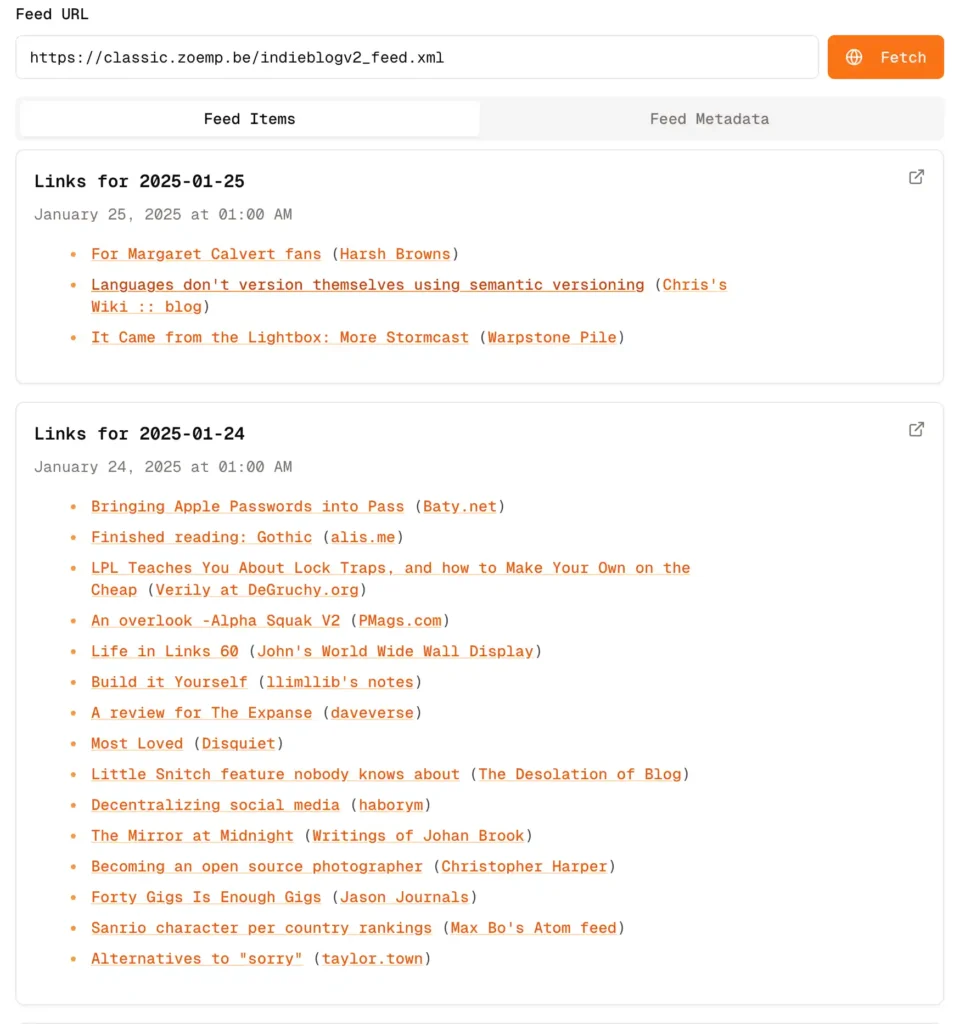

- A weekly summary of https://khrys.eu.org/revue-hebdo/

- A daily top 10 for new entries from https://indieblog.page/

- A weekly reminder of kids friendly events happening nearby, based on https://out.be/.

Files Syncing

- A cron job syncing my .torrent files from Dropbox to QBittorrent, using rclone

- A cron job syncing my downloaded audiobooks to AudioBookShelf, using rsync.

- A cron job syncing my downloaded ebooks to Calibre, by uploading the files to Calibre API, using bash.

Music management

- A cron job syncing my downloaded music (torrent) to my main Music Library, using rsync.

- A cron job verifying the quality of my Music Library content using mp3val and reporting for corrupted files via ntfy.

- A cron job verifying the quality of my Soulseek download folder using mp3val and only moving the verified ones to my Music Library.

- A user script integrating with ListenBrainz/LastFM scrobbler for when I listen to live radios from RTBF (they use radioplayer technology).

- A user script to filter automatically the search results within Soulseek (web version running on Cloudron).

Photos management

- A cron job that syncs my photos library between Dropbox and Immich, using rclone, but only for pics and videos under a certain size.

- A cron job that generate Immich album only made of pictures of specific persons.

- Scripts that I run ad-hoc, using ffmpeg, to compress my pictures, videos, fix their EXIF date at need.

- Scripts that I run ad-hoc, using Syncthing, to remove all pics/videos from my Phone (WhatsApp and Camera folders) and move them to Dropbox, before I compress and triage them. Anything on Dropbox is then sync to Immich, so that's how I keep my phone clean.

Emails management

- A script which checks for invoices (with attachments or downloadable links) in my emails and sends them to my Dropbox forwarding email, which in turn backups those attachments in a specific folder which can be treated.

Freelancer paperwork management

- I have several scripts to rename my receipts and invoices with the right date, invoice nr, provider and organize them per year/quarter/month, it's done in PHP.

- A user script to fill my timesheet automatically based on my declared days off.

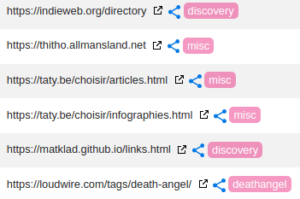

Web curation and bookmark management

- A cron job that will browse my recent Shaarli shares and, when needed, add tags, HN Thread links, Web Archive link, and a summary. It's done in Python.

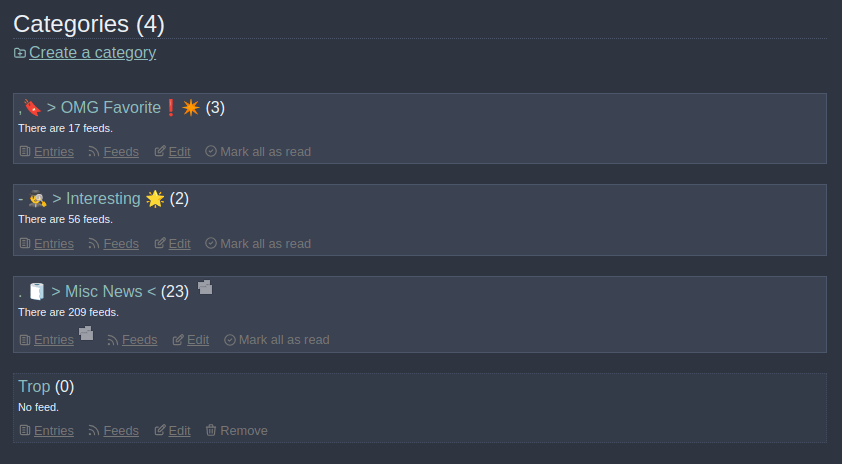

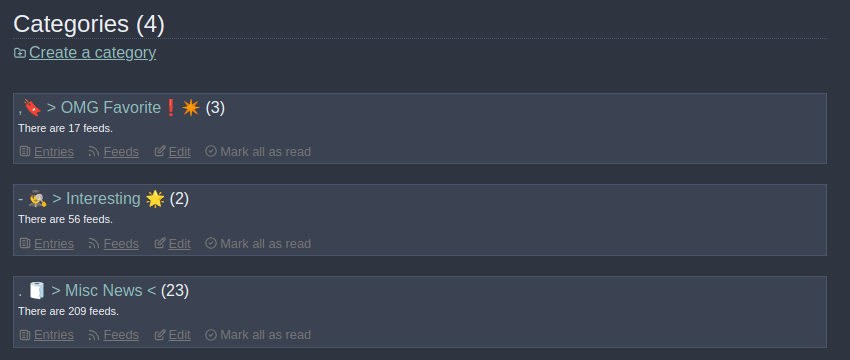

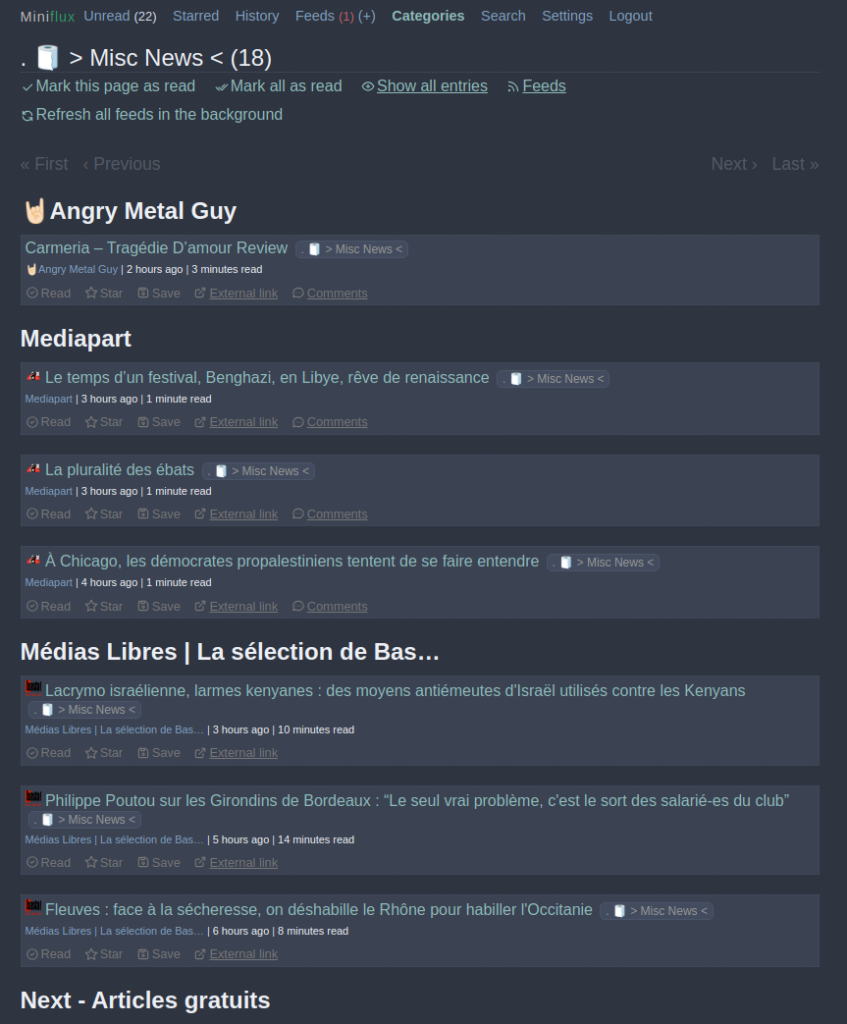

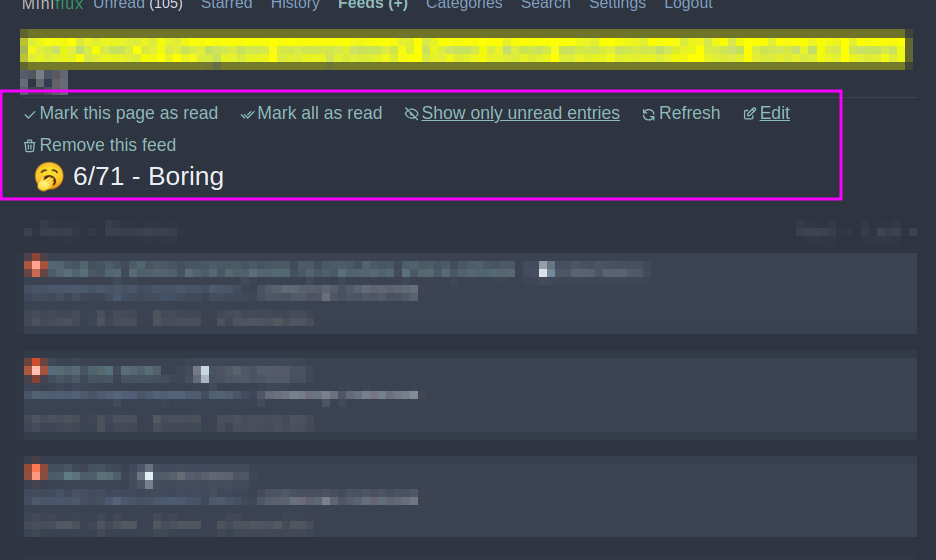

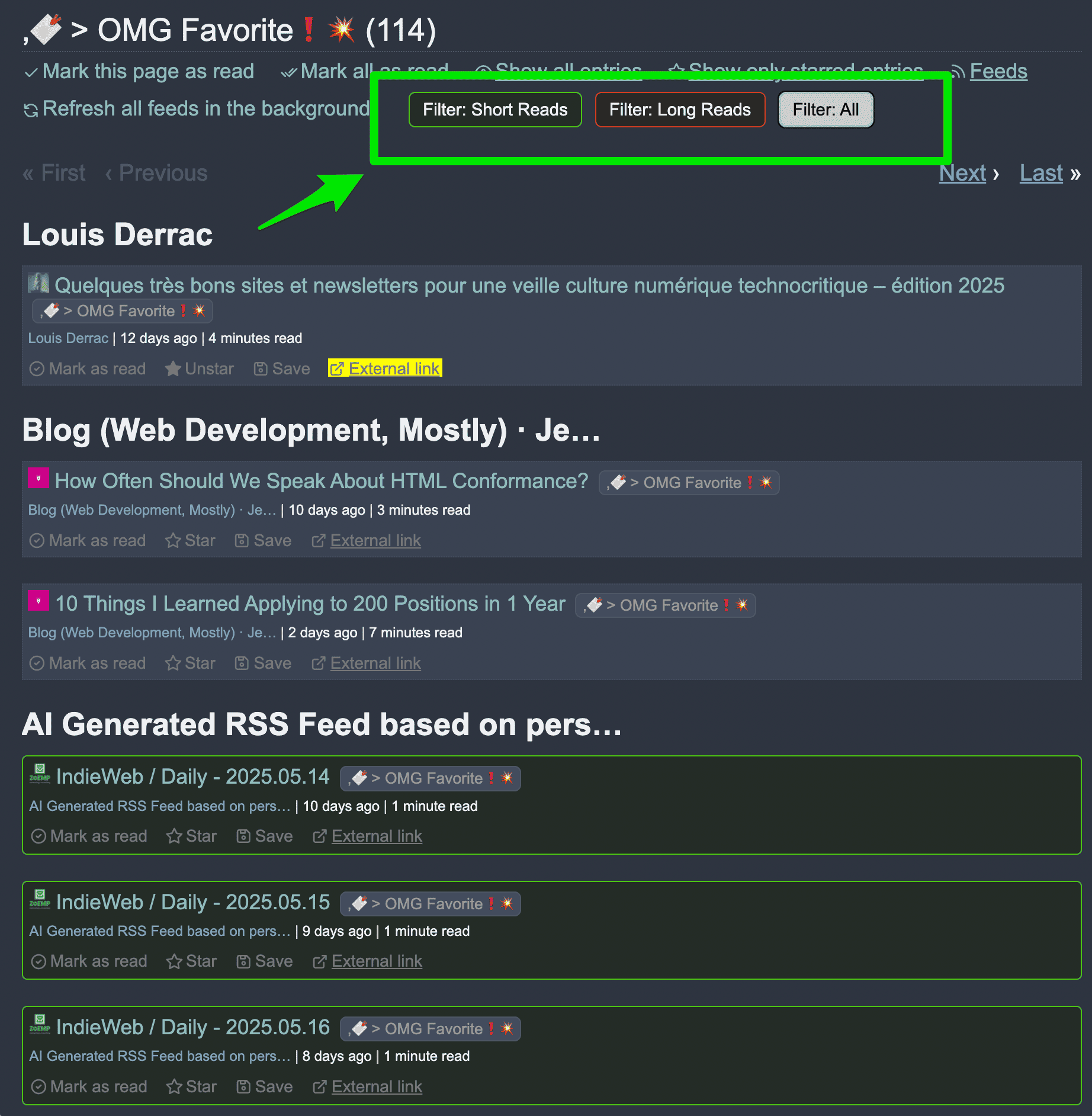

- A cron job that will browse my Miniflux unread entries and mark as read the ones that I will probably not care about. using Mistral AI.

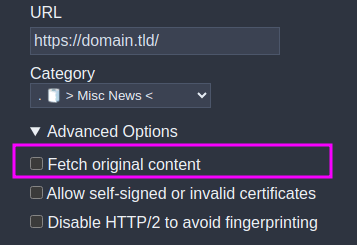

- A cron job that will browse my Miniflux unread entries and send me an email with the unread entries summarized and grouped by feed, a bit like feu Subworthy.com (by Phil Stephens) was doing around 2022.

- A user script that will add TLDRs buttons at the bottom of my Miniflux entries, so I can get a quick summary generated by Mistral AI, at need.

- A user script to warn me on any website if there is a Hacker News thread for the page I visit.

- A user script to highlight and extract all top links from the current Hacker News thread.

LinkedIn management

- A user script that adds a reply generator in LinkedIn conversations, using Mistral AI.

Obsidian Backups

- I'm using aicommit2 called from Obsidian Git plugin to generate meaningful commit messages about what is being backed up.

This looks quite a lot, and that's not all.